Sabina Sobhani is the Senior Director of Product for AI at Panorama Education. She leads the exploration and building of generative AI products for Solara with Class Companion.

AI is quickly becoming a part of everyday work in schools, helping teachers personalize support and make sense of student data. But not every AI tool is ready for the classroom. Even products designed for education can fall short if they haven’t been intentionally designed and tested for the realities of teaching and learning.

When AI produces inaccurate, biased, or confusing results, it does more than waste time. It erodes trust. Educators deserve to know that the tools supporting their students are safe and built for real classroom use. That’s why Panorama has invested deeply in designing, evaluating, and improving our AI-powered products to meet the highest standards of quality and fairness.

In this article, we discuss what trustworthy AI means at Panorama, how Panorama designs AI for K-12, and Panorama's research and evaluation process.

What Trustworthy AI Means at Panorama

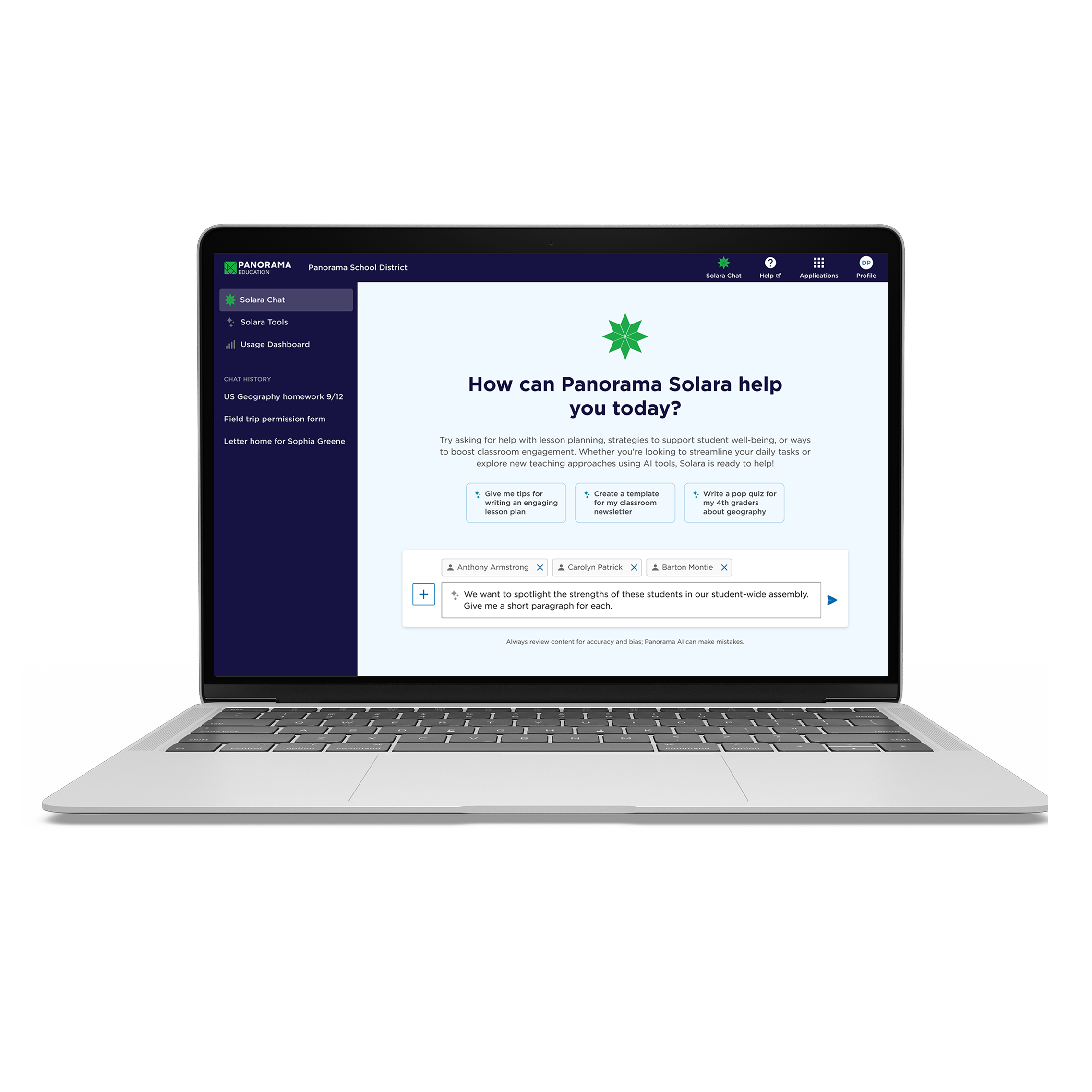

Panorama Solara, our customizable, district-managed AI platform, was designed with a core guiding principle: AI in schools must be both trustworthy and secure.

At Panorama, trustworthiness means two things:

-

Protecting student data through rigorous privacy and security standards, because AI is only effective when it can safely use real school data.

-

Ensuring the quality of AI outputs through continuous evaluation for accuracy, bias, and educational value.

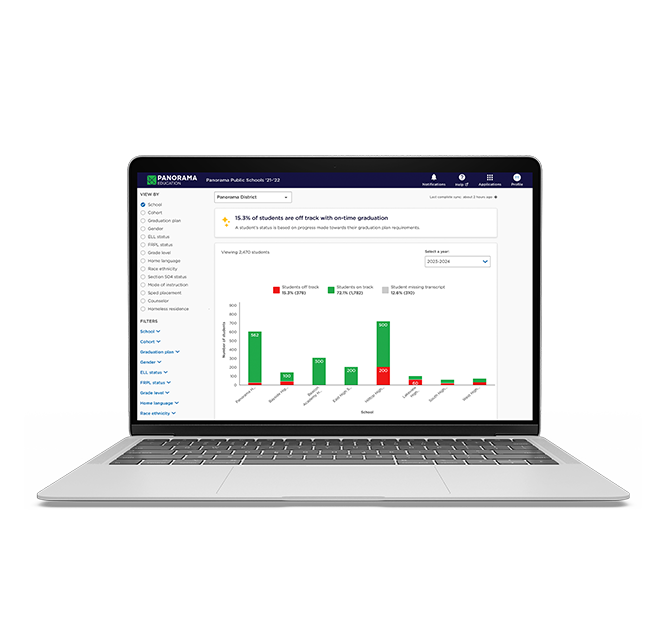

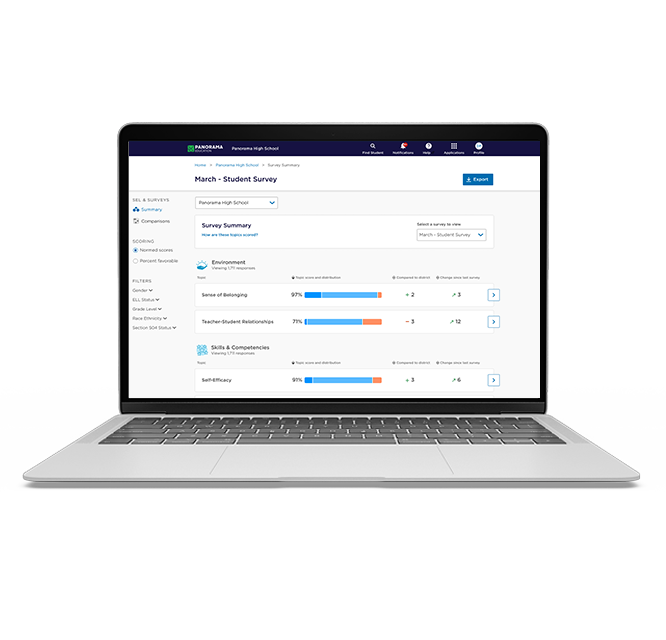

Our Data Science and Applied Research team rigorously evaluated Solara to ensure its insights are clear, fair, and aligned with the realities of teaching and learning. Educators should understand how AI tools reach their conclusions, especially when those insights influence student support decisions.

Here, we're discussing how Panorama designs and evaluates Solara to meet the highest standards of safety, accuracy, and fairness, and what district leaders should look for when assessing AI tools for their schools.

How Panorama Designs AI for K-12

Designing trustworthy AI for schools starts long before an educator ever interacts with it. Panorama Solara was built from the ground up for the K-12 environment, with safety, accuracy, and educational alignment embedded into every step of the process, from initial ideation to building and testing.

Security and Privacy by Design

In education, data security and privacy standards are critical for maintaining trust.

Panorama Solara was built to meet those expectations through a secure, closed infrastructure and strict compliance controls. Solara is designed with security and privacy at its core, operating within a secure infrastructure that keeps all data inside the Panorama ecosystem. Solara meets or exceeds SOC 2 Type 2, FERPA, and COPPA standards, and is 1EdTech certified for data privacy.

Solara integrates securely with student information systems, assessments, and Panorama’s own tools. Role-based access ensures teachers only see data for their own students, while district and school leaders can view aggregated insights to inform planning and support.

Safe and Responsible AI for Schools

Safety isn’t an afterthought in Solara. It’s built into the foundation. The model is guided by multiple layers of safeguards that keep every conversation focused on learning and student support.

- Educational boundaries: Solara’s system prompt limits responses to educational contexts.

- Content filtering: Built-in guardrails prevent unsafe or off-topic responses, powered by AWS Bedrock technology.

- Special education safeguards: Dedicated special education prompts ensure Solara never makes diagnostic or placement recommendations, helping educators use AI safely without replacing professional judgment.

- District customization: Districts can configure Solara with local terminology, approaches and content rules so the AI reflects their unique policies and practices.

Partnering with Anthropic for Ethical AI

Different AI models bring different perspectives on ethical use, data protection, and transparency, and those differences matter deeply in education. Schools need assurance that any AI system interacting with student or staff data is built with clear safeguards and responsible design choices. Without that foundation, even well-intentioned AI tools can introduce risks around privacy, fairness, and trust.

Panorama chose Anthropic and the Claude family of models because of their proven commitment to responsible AI development. Anthropic’s Responsible Scaling Policy prevents model releases until safety standards are met. Their Constitutional AI framework embeds explicit principles that guide the model to be fair, honest, and respectful.

With more than 100 internal security controls and strict data-handling policies, Anthropic’s infrastructure aligns with Panorama’s own privacy commitments. Anthropic does not use customer data for model training, an essential protection for districts managing sensitive student information.

Bias Reduction and Fairness

AI systems can sometimes reflect or amplify patterns in their training data, leading to inconsistent or biased results. In education, that’s a serious concern when accuracy and fairness directly affect students and staff.

Panorama Solara includes various measures to mitigate bias that can otherwise emerge in AI systems. For example, as one proven bias mitigation approach, Solara’s system prompts explicitly instruct the model that it must not discriminate or favor any group. This language is based on recent research showing that clear anti-bias phrasing in prompts can reduce biased responses. Combined with Anthropic’s fairness protocols, this approach helps ensure Solara remains consistent.

Minimizing Hallucinations

A common problem of other AI systems, in education and beyond, are “hallucinations,” where AI confidently provides information that is incorrect. In education, this can be particularly problematic. For example, an AI assistant that fabricates student data, misstates research findings, or provides inaccurate recommendations could lead to real-world consequences for students and educators.

Panorama Solara is specifically designed to minimize hallucinations and increase accuracy versus other tools. For example, to keep outputs grounded and dependable, Solara relies on structured prompt design and real data whenever possible. When educators upload documents or reference specific students, the model anchors its responses in those verified sources. Panorama’s Engineering team also conducts human review and quality assurance on Solara’s system prompts and configurations to detect and correct potential inaccuracies before release.

Each Panorama-developed prompt is built around educational best practices so that Solara reasons like an educator, not a generic chatbot. Informed by more than a decade of experience partnering with schools and districts nationwide, Panorama brings the same rigor and classroom perspective to Solara that has guided its work with educators for years.

This combination of data grounding, prompt structure, and continuous testing significantly reduces the risk of hallucinations in Panorama’s officially released tools.

Context Engineering

AI systems don’t just rely on data.. They rely on context. How information is framed and structured can dramatically affect the quality and relevance of an AI’s responses.

That’s why another critical component of Solara’s design is Context Engineering: the process of structuring and providing contextual information that shapes how the AI interprets and responds to educator prompts. In large language models, performance is deeply influenced by context: the framing of the prompt, the surrounding data, and the constraints that guide reasoning.

Panorama applies this principle intentionally in Solara by grounding AI interactions in verified educational data, structured system prompts, and well-defined role context. This ensures that Solara’s outputs are relevant to the realities of teaching and learning, not just linguistically accurate. By engineering context (not just content), Panorama helps Solara reason like an educator, respond with clarity, and stay aligned with the goals of K-12 instruction.

Continuous Evaluation and Improvement

Building a trustworthy AI system isn’t a one-time effort. It requires constant monitoring and refinement. Panorama continuously evaluates Solara to ensure it performs reliably in real educational settings.

Panorama’s Engineering team maintains an ongoing evaluation loop informed by field data, educator feedback, and internal testing. Solara’s performance is continuously monitored for accuracy, fairness, and instructional value, ensuring it evolves in response to the needs of schools and remains aligned with real-world applications. Each time new feedback is incorporated or updates are made, additional testing is conducted to validate performance and maintain quality across releases.

Additionally, Panorama has built a dedicated evaluation framework for monitoring and improving Solara’s AI output. Today, this framework focuses on fast, actionable feedback and core safety checks—assessing general response quality, handling of edge cases, and alignment with standards for tone, usefulness, and safety. Evaluation methods include a combination of automated pass/fail checks for rapid signal detection and human-in-the-loop (HITL) review for more nuanced evaluation of tone and clarity. This approach allows Panorama to move quickly while maintaining high standards of quality.

Evaluating AI for K-12: What We Learned from Testing Panorama Solara

Designing trustworthy AI is only half the equation. To ensure Solara performs as intended in real classrooms, Panorama’s Data Science and Applied Research team conducted a series of studies to rigorously evaluate its accuracy, clarity, and fairness.

How Do You Evaluate AI Models for Bias and Quality?

Measuring AI quality isn’t just about how the technology performs. It’s about how AI supports teaching and learning. When teachers use AI tools to support planning, communication, or intervention work, they’re making decisions that shape students’ learning experiences.

That’s why our data science team wanted to know: How can we measure whether an AI model produces responses that are clear, helpful, relevant, and fair?

To answer that, we conducted a series of studies that apply both human review and AI-as-judge testing. In the AI-as-judge approach, we use an LLM to evaluate model outputs across dimensions such as clarity, helpfulness, and bias. Our goal was to understand not only whether this method works, but also what it reveals about Solara’s performance in real educational scenarios.

What We Found

Solara’s responses are coherent, relevant, and helpful.

Across our testing, Solara consistently generated clear, evidence-based responses that reflect the tone, language, and judgment educators expect in school settings. These findings validate that Solara’s design and training process are translating into real-world reliability, showing that its insights can be trusted to support meaningful decisions in schools.

Solara demonstrated strong consistency across demographic groups.

Previous studies have shown that generic AI tools can reflect bias from both demographic and implicit factors. Examples include suggesting smaller salary offers for minorities and women, estimating different car values based on the owner’s race, and predicting that a woman is less likely to win a chess match.

In contrast, our research indicates that Solara, by design, avoids these biases and delivers consistent results across groups. Solara is designed to minimize bias from the very start by excluding demographic information such as race or gender from prompts whenever possible.

To assess potential bias, we tested how each model responded when given context that included names suggesting different demographic backgrounds. The Claude model, which powers Solara, showed high consistency and produced nearly identical responses regardless of names implying different races or genders.

This means that when two students with similar academic or attendance profiles receive support through Solara, educators can expect the same recommendations and insights, regardless of the student’s name, gender, or racial background. Few AI tools in education have undergone this level of internal bias testing, underscoring Panorama’s commitment to developing AI that is fair and trustworthy for all students.

AI-as-judge works.

We found that AI can reliably evaluate responses for clarity, helpfulness, relevance, and factual accuracy, producing results that closely align with human judgments.

To test this, we collected a series of real Solara chats and had human reviewers rate them on these same dimensions. We then asked large language models (LLMs) to rate the same chats using the same criteria and compared the results. The close alignment between the two sets of scores confirmed that AI-as-judge is a reliable method for evaluating Solara’s output quality. This gives us a scalable method to assess Solara’s performance over time, complementing ongoing human review and helping ensure educators receive accurate, actionable insights.

Building trust through evidence

These findings underscore an important point: many AI tools marketed for educational purposes are not thoroughly tested for accuracy, bias, or instructional value before being introduced to classrooms. Panorama’s research shows why this step is essential.

For district leaders, the takeaway is clear and critical: don’t just ask if an AI tool “works.” Ask how it’s evaluated. The most effective and trustworthy AI systems in education are those that demonstrate real evidence of accuracy, fairness, and reliability.

What This Research Means for District Leaders

AI in education is still relatively new territory for most districts, and it can be challenging to distinguish between claims and evidence. The results of Panorama’s evaluation work point to a larger truth: trust in AI begins with transparency. Districts need to see clear proof that an AI tool produces accurate, fair, and valuable results before putting it in the hands of educators.

When considering an AI solution, district leaders can use a few key questions to guide their review process:

-

How is the AI-powered tool built for education? Look for tools designed to address specific K-12 challenges and grounded in real school data, educator workflows, and classroom context, rather than relying on general-purpose AI models built for broad use.

-

How is it tested for quality and bias? Ask vendors to share their evaluation process, including whether they measure how the model performs with different types of student data.

-

What evidence can you share? The best partners are transparent about results. They can show how their models are reviewed and refined over time to reduce bias and improve reliability.

By asking these questions, district leaders can cut through the noise and identify partners who take both safety and effectiveness seriously.

Why This Matters

Many AI tools are built from general-purpose models and optimized for speed or broad commercial use, not for the realities of schools. Solara is different. It’s purpose-built for K–12 and rigorously tested to ensure accuracy, safety, and educational value, giving educators confidence that the insights they receive are both responsible and relevant.

Fairness is a critical part of that responsibility. In education, AI bias can cause allocation harms (when technology unfairly influences who receives opportunities or support) and quality-of-service harms (when an AI system performs better for some groups of students than others). By proactively testing Solara for these forms of bias, Panorama helps ensure that every educator and student benefits from AI that is consistently reliable and designed to serve all learners well.

Building Trustworthy AI for Schools

AI has the potential to transform how educators work, helping them make sense of complex data and act quickly on what students need. But that potential only matters if the technology is safe, secure, and reliable.

At Panorama, reliability starts with how we build and evaluate our tools. Every feature is tested, transparent, and safe for schools. From how Solara is developed to how it’s assessed for bias and accuracy, our goal is to make AI both powerful and responsible.

District leaders should expect that same level of transparency from any vendor they consider. When looking for a partner, ask how their AI features are developed, how they’re tested, and what evidence supports their claims. The right partner will be open about their process and ready to demonstrate how their approach works in schools.

Because in education, trust is the real measure of innovation.

![How AI Ready Is Your District? [Infographic]](https://www.panoramaed.com/hubfs/iStock-1912513615.jpg)